Designing for Today’s Network of Intelligent Agents

AI agents are no longer isolated tools — they’re part of interconnected systems. Each has its own capabilities and degrees of autonomy. Some follow simple rules. Others adapt, predict, or operate independently.

But autonomy alone doesn’t create meaningful impact.

The real opportunity lies in designing the connective framework — the pathways and touchpoints that allow agents to collaborate, share context, and transfer responsibility seamlessly. In a well-designed system, each agent plays to its strengths, humans step in only when their judgment is essential, and the entire experience feels cohesive and purposeful.

Understanding the spectrum of autonomy

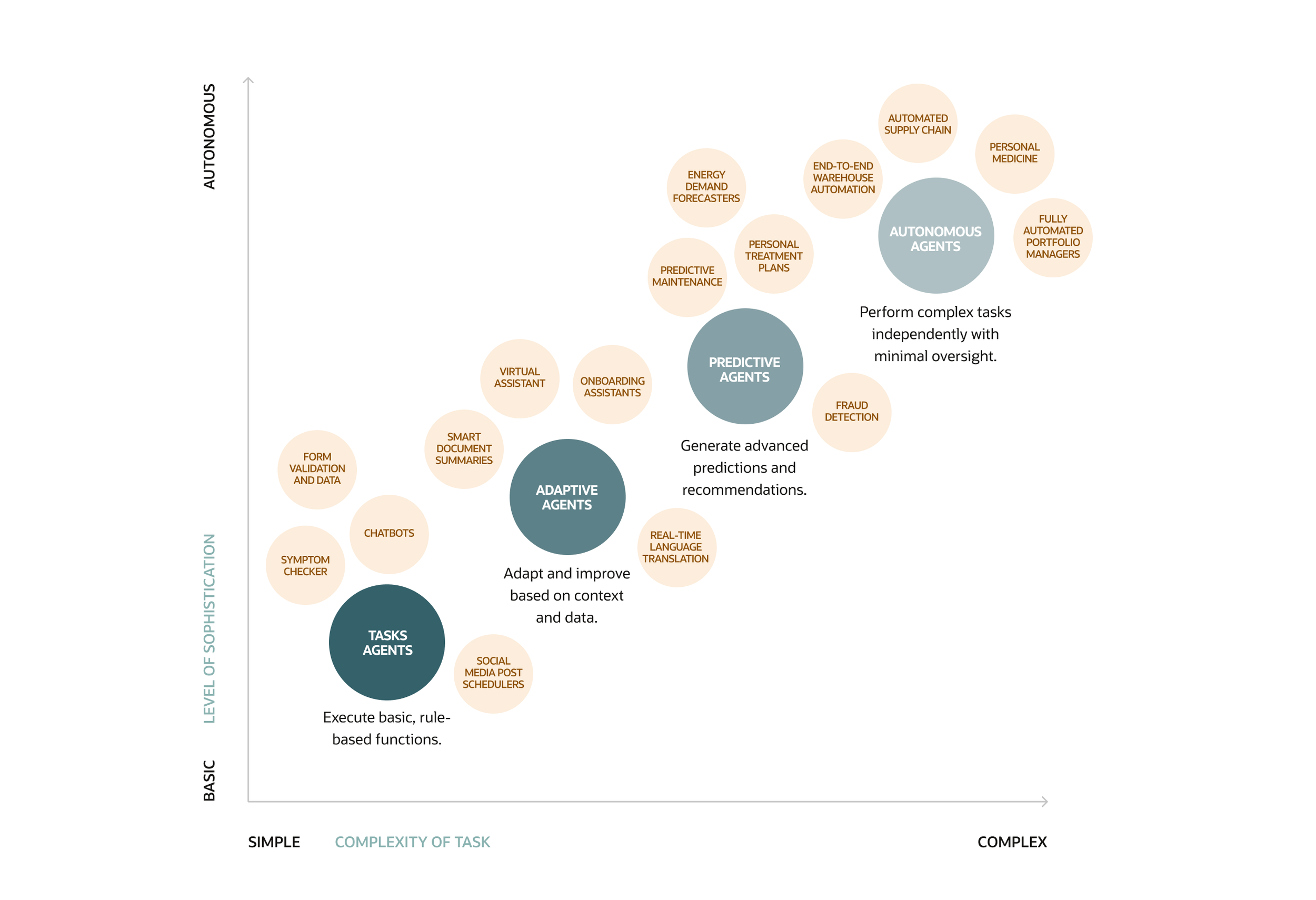

Not all agents have the same level of autonomy. They exist along a spectrum, from tightly rule-based systems to highly independent actors, and each plays a different role in the larger ecosystem.

Understanding their capabilities is the first step in coordinating them. The real design challenge is not just building powerful agents, but designing the collaboration and hand-off dynamics between them.

Agents can fall into four categories along this spectrum:

Task Agents – rule-based systems that handle repetitive processes such as data entry, report generation, or simple customer inquiries.

Adaptive Agents – systems that learn from context, like personalized content recommenders or dynamic workflow assistants.

Predictive Agents – tools that use data to forecast outcomes and flag potential issues, such as demand forecasting engines or predictive maintenance systems.

Autonomous Agents – highly capable systems that manage complex processes end-to-end with minimal human oversight, such as automated supply chain managers or self-optimizing resource allocators.

Designing collaboration, not just capability

The real design challenge isn’t simply to build powerful agents — it’s to shape the relationships between them. An individual agent might perform exceptionally well in isolation, but its true value emerges when it operates as part of a coordinated system. That means designing for interaction: when agents act in sequence to complete a process, when they work in parallel to speed outcomes, and when they know it’s time to pass the baton to a human.

This goes beyond workflow diagrams. It requires a shared framework for decision-making so each agent understands not only its own role but also the roles of others. It means creating clear protocols for how context and information flow between agents, ensuring they have the situational awareness to make better decisions. And it demands thoughtful consideration of human involvement — deciding where judgment, creativity, and empathy are indispensable, and making those moments intentional rather than incidental.

When collaboration is designed with the same care as capability, the result is an ecosystem where agents amplify each other’s strengths, reduce redundancy, and create a seamless experience that feels coordinated, purposeful, and human-centered.

Six methods for designing effective agent systems

To move from a collection of individual agents to a coordinated ecosystem, designers need to think beyond single interactions and focus on the relationships between agents. That means establishing a shared framework for how they work together, when humans get involved, and how information flows through the system.

The following six methods can guide designers toward effective agent orchestration:

Map human goals to the right agents

Start with the human objective, not the technology. What does the person need to achieve? Match that intent to the agent most capable of delivering on it, ensuring each one operates where it’s strongest.

Define predictable coordination patterns

Coordination isn’t random; it follows a structure. Design workflows that clarify when agents act in sequence, in parallel, or hand off to another agent or human. This keeps interactions smooth and efficient.
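The three patterns named above (sequence, parallel, hand-off) can be sketched in a few lines. This is only an illustration under stated assumptions: an "agent" here is a plain function that takes a context dictionary and returns an updated one, and the names `run_in_sequence`, `run_in_parallel`, and `hand_off_to_human` are hypothetical, not part of any particular framework.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Assumption: an agent is any function that maps a context dict to an updated dict.
Agent = Callable[[dict], dict]


def run_in_sequence(agents: list[Agent], context: dict) -> dict:
    """Each agent receives the context produced by the one before it."""
    for agent in agents:
        context = agent(context)
    return context


def run_in_parallel(agents: list[Agent], context: dict) -> dict:
    """Agents work on copies of the same context; results are merged in list order."""
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(lambda agent: agent(dict(context)), agents))
    merged = dict(context)
    for result in results:
        merged.update(result)
    return merged


def hand_off_to_human(context: dict, reason: str) -> dict:
    """Mark the context as owned by a person and record why."""
    return {**context, "owner": "human", "handoff_reason": reason}


# Illustrative stand-in agents (hypothetical behavior).
def forecast(ctx: dict) -> dict:
    return {**ctx, "delay_risk": "high"}


def reschedule(ctx: dict) -> dict:
    return {**ctx, "work_orders": "updated"}


def notify(ctx: dict) -> dict:
    return {**ctx, "subcontractors": "notified"}


plan = run_in_sequence([forecast], {"project": "high-rise"})
plan = run_in_parallel([reschedule, notify], plan)
plan = hand_off_to_human(plan, "review before committing the schedule")
```

Making the hand-off an explicit step, with a recorded reason, is one way to keep it visible to both the system and the person receiving it.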

Ensure transparency

Trust grows when people understand who—or what—is acting, why it’s acting, and what happens next. Always make it clear when a human will take over.

Design modularly

All systems change over time. Build with flexibility so agents can be added, replaced, or upgraded without disrupting the whole. Think of it as creating an adaptable toolkit.
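One way to picture this kind of modularity is a shared interface plus a registry keyed by role. The sketch below assumes every agent exposes a single `run` method; the `Agent` protocol, `AgentRegistry`, and both scheduler classes are hypothetical names used for illustration.

```python
from typing import Protocol


class Agent(Protocol):
    """Assumed shared interface: every agent exposes the same run method."""

    def run(self, context: dict) -> dict: ...


class AgentRegistry:
    """Looks agents up by role so callers never depend on a concrete class."""

    def __init__(self) -> None:
        self._agents: dict[str, Agent] = {}

    def register(self, role: str, agent: Agent) -> None:
        # Registering an existing role replaces the previous agent.
        self._agents[role] = agent

    def run(self, role: str, context: dict) -> dict:
        return self._agents[role].run(context)


class RuleBasedScheduler:
    def run(self, context: dict) -> dict:
        return {**context, "schedule": "fixed shifts"}


class AdaptiveScheduler:
    def run(self, context: dict) -> dict:
        return {**context, "schedule": "shifts adjusted for weather"}


registry = AgentRegistry()
registry.register("scheduler", RuleBasedScheduler())
before = registry.run("scheduler", {"site": "tower"})

# Upgrade the scheduler without touching any code that calls it.
registry.register("scheduler", AdaptiveScheduler())
after = registry.run("scheduler", {"site": "tower"})
```

Because callers ask for a role rather than a specific agent, swapping the implementation does not ripple through the rest of the system.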

Engineer for context

Even the most capable agent needs the right situational information to perform well. Provide relevant inputs, data, and cues to improve accuracy and effectiveness.

Plan for resilience

Expect setbacks and design around them. If one agent fails, another should step in without interrupting the flow.
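A fallback chain is one simple way to express this. The sketch below assumes agents are plain functions that raise an exception when they fail; `run_with_fallback` and the two supplier agents are hypothetical examples, not a prescribed design.

```python
from typing import Callable

# Assumption: an agent is a function that returns an updated context or raises on failure.
Agent = Callable[[dict], dict]


def run_with_fallback(agents: list[Agent], context: dict) -> dict:
    """Try each agent in order and return the first successful result."""
    errors = []
    for agent in agents:
        try:
            return agent(context)
        except Exception as exc:
            # Record the failure and move on to the next agent in the chain.
            errors.append(f"{agent.__name__}: {exc}")
    raise RuntimeError("all agents failed: " + "; ".join(errors))


def primary_supplier_agent(ctx: dict) -> dict:
    # Simulated failure for the purpose of the example.
    raise TimeoutError("supplier service did not respond")


def backup_supplier_agent(ctx: dict) -> dict:
    return {**ctx, "delivery_window": "rescheduled", "handled_by": "backup"}


result = run_with_fallback(
    [primary_supplier_agent, backup_supplier_agent],
    {"order": "steel"},
)
```

If every agent in the chain fails, the error is raised with the collected reasons, which is a natural point to bring in a human.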

Together, these methods create systems where agents excel in their individual roles and strengthen each other through collaboration. They turn complexity into clarity, and technology into capability.

Human touchpoints as strategic interventions

In this kind of system, humans aren’t in the loop for every decision — they step in intentionally when their judgment, empathy, or creativity adds unique value.

This preserves efficiency, builds trust, and ensures technology augments rather than replaces human expertise.

When agent confidence levels vary, the system should make that variation explicit. Agents can communicate not just recommendations but also their confidence levels and reasoning.

A decision support layer might note: “Agent A is 95% confident in the diagnosis but only 60% confident in the treatment approach.”

This transparency acknowledges that human intuition, decision-making, and knowledge are needed to fill gaps where agent certainty is low.

Over time, the system can learn which types of decisions consistently benefit from human insight, refining hand-off triggers to keep human judgment focused on genuinely complex cases rather than routine uncertainty.
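A confidence-based hand-off like the one described above can be sketched as a threshold check. The `Recommendation` structure, the `route` function, the reasoning strings, and the 0.8 threshold are all assumptions made for illustration; a real system would tune the threshold per decision type as it learns where human insight helps.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    topic: str
    confidence: float  # 0.0 to 1.0, as reported by the agent
    reasoning: str


# Assumed starting threshold; in practice this would be refined over time.
REVIEW_THRESHOLD = 0.8


def route(rec: Recommendation, threshold: float = REVIEW_THRESHOLD) -> str:
    """Send low-confidence recommendations to a human and let the rest proceed."""
    return "agent" if rec.confidence >= threshold else "human"


# Confidence values mirror the example above; reasoning text is illustrative.
recommendations = [
    Recommendation("diagnosis", 0.95, "strong match with known patterns"),
    Recommendation("treatment approach", 0.60, "several options fit equally well"),
]

routing = {rec.topic: route(rec) for rec in recommendations}
```

Here the diagnosis proceeds automatically while the treatment approach is routed to a person, along with the agent's stated confidence and reasoning.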

This approach transforms automation into augmented expertise — where agents operate as trusted collaborators, amplifying precision, speed, and scope while keeping the human at the heart of the experience.

Collaboration in action: Jordan’s story

Imagine a situation where Jordan, a project manager for a large commercial construction firm, is overseeing the final stages of a high-rise office build. Just weeks before the scheduled handover, several risks emerge that could delay completion and impact costs.

Predictive Agent analyzes project timelines, weather forecasts, supplier delivery data, and past project records. It flags a high probability of schedule slippage due to an upcoming stretch of severe weather and potential late deliveries from a key materials vendor.

Task Agent triggers contingency protocols: rescheduling certain outdoor activities, generating updated work orders, notifying subcontractors of timeline changes, and coordinating new delivery windows with suppliers.

Adaptive Agent reviews historical site data, labor availability, and equipment usage patterns. It recommends reassigning crews and adjusting shift schedules to focus on indoor work during the bad weather period.

Human Intervention – Jordan reviews the synthesized plan and notices that the AI recommendations don’t account for a city inspection already booked for an outdoor installation. She adjusts the schedule to bring that work forward, ensuring the inspection happens before the weather delay.

Autonomous Agent oversees the revised schedule, coordinates subcontractor activities, updates compliance documentation, and manages equipment rentals — all without manual intervention.

Continuous Learning Loop – Predictive agents monitor ongoing weather, supply chain, and labor data; adaptive agents refine strategies for weather-related delays; and task agents keep communication and documentation aligned in real time.

In a well-designed agent ecosystem, these moments of collaboration happen seamlessly. Every agent — regardless of type — feeds its learnings back into the system, creating a continuous loop that strengthens future planning, reduces waste, and ensures projects are delivered on time and on budget.

The future is a network, not a soloist

The future of our AI-driven experiences won’t be defined by a single brilliant agent.

It will be shaped by how well we design systems of agents to work together and complement each other’s strengths while keeping the human experience at the center.

As designers, our role is to create the conditions for that collaboration to thrive, building systems that are intelligent, adaptive, and deeply human at their core.
